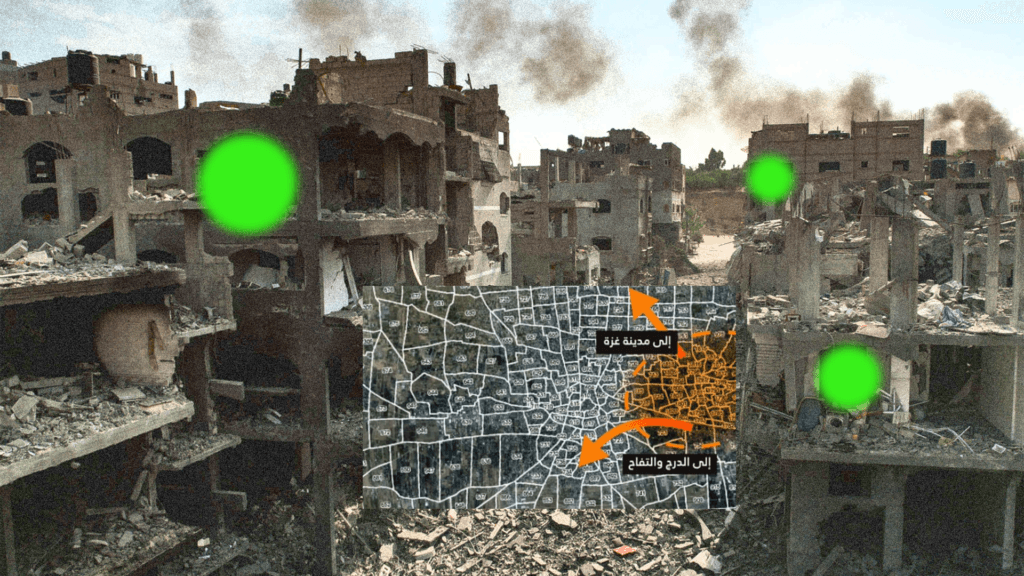

The Gaza conflict has been thrust into global focus with the controversial use of advanced artificial intelligence (AI) by the Israel Defense Forces (IDF). This unprecedented application of AI tools, such as the Habsora system, has significantly altered the dynamics of modern warfare, raising urgent questions about accountability and the ethical implications of automated decision-making. Has the integration of AI into military strategy contributed to the staggering loss of life?

How AI Shapes Modern Warfare

The IDF’s use of AI is rooted in a decade-long initiative to modernize intelligence operations. The Habsora system, colloquially known as “The Gospel,” exemplifies this transformation. Designed to rapidly process vast amounts of data, it identifies potential targets with unprecedented speed and accuracy. This system draws on a meticulously curated database of addresses, infrastructure details, and tunnel networks in Gaza. By automating such processes, the IDF can maintain an aggressive operational tempo.

However, speed comes at a cost. Critics argue that the system prioritizes efficiency over scrutiny, potentially increasing the risk of erroneous targeting. Unlike human analysts who might deliberate over the broader consequences of their decisions, AI-driven systems rely on predefined algorithms that lack nuanced ethical considerations.

The Escalation of Civilian Casualties

The conflict has claimed over 45,000 lives, with more than half of the casualties reportedly women and children, according to Gaza’s Health Ministry. While the IDF disputes the accuracy of these figures, the toll highlights a growing concern: the acceptable threshold for civilian harm appears to have shifted. Soldiers and analysts familiar with IDF practices suggest that automation has enabled the rapid generation of low-level targets, including individuals suspected of involvement in the October 7 attacks.

This shift has sparked intense debate within the military’s upper echelons. Some commanders worry that over-reliance on AI tools undermines traditional intelligence processes, while others see these technologies as indispensable in a fast-paced conflict environment. The crux of the issue is whether these tools, designed to minimize human oversight, are inadvertently escalating the violence.

The Ethical Dilemma

Integrating AI into warfare raises profound ethical questions. Can machines accurately distinguish between combatants and civilians? The algorithms underpinning tools like Habsora are trained on historical data, but their ability to adapt to evolving battlefield dynamics remains limited. Human oversight, though still present, is often reduced to rubber-stamping AI-generated recommendations.

Furthermore, the opacity of machine-learning systems compounds the issue. Military officials may not fully understand how an AI arrives at its conclusions, making accountability elusive. This “black box” problem has fueled concerns about whether decisions driven by AI are sufficiently scrutinized.

AI’s Role in Shaping Perception

Beyond its tactical applications, AI plays a strategic role in shaping public perception. Sophisticated propaganda campaigns employ AI-generated content to sway opinions and justify military actions. By analyzing social media trends and crafting targeted narratives, these tools bolster support for contentious operations.

However, this raises another ethical quandary: does the use of AI in psychological operations blur the line between information dissemination and manipulation? Critics argue that such tactics risk eroding public trust, both domestically and internationally.

Lessons from the Gaza Conflict

The Gaza war underscores the double-edged nature of AI in military applications. On one hand, these technologies promise greater precision and efficiency. On the other, they introduce new risks, from algorithmic biases to the dehumanization of decision-making. As militaries worldwide explore similar capabilities, the Gaza conflict serves as a cautionary tale.

To mitigate these risks, experts recommend stricter oversight mechanisms and clearer ethical guidelines. Ensuring that AI systems remain tools, not decision-makers, is crucial to preserving accountability. Moreover, fostering international dialogue on the regulation of military AI could help establish shared norms and prevent misuse.

Conclusion

The integration of AI into warfare is reshaping the battlefield, offering both opportunities and challenges. While tools like Habsora demonstrate the potential of AI to enhance military operations, their use in Gaza highlights the human cost of unbridled technological adoption. As the death toll continues to rise, the world must grapple with the ethical implications of delegating life-and-death decisions to machines. Only by balancing innovation with accountability can we hope to navigate this complex frontier responsibly.